According to Inc., data centers consumed between 240 and 340 TWh of electricity in 2022, or 1 to 1.3% of global electricity demand. By 2030, that figure is projected to reach 945 TWh, roughly equivalent to Japan’s entire annual electricity consumption. Equinix, for one, is doubling its global footprint in just five years, matching the growth of its first 27 years. The underlying problem is that electron-based systems generate massive heat, which in turn requires equally massive cooling infrastructure. The solution being pursued is photonics: programs like NTT’s IOWN and Europe’s F5G, Microsoft’s hollow-core fiber, and products from Ayar Labs, Lightmatter, and Celestial AI. These light-based systems promise to cut power consumption, reduce heat generation, and lower communication latency.

The electron problem we can’t ignore

Here’s the thing about our current data infrastructure – it’s fundamentally built on moving electrons through circuits. And every time those little charged particles move, they generate heat. It’s physics, plain and simple. So we build these massive cooling systems to combat the heat, which themselves consume enormous amounts of power. It’s this vicious cycle that’s becoming completely unsustainable, especially with AI workloads demanding more and more computational power.

Think about it – cooling systems alone can account for up to 40% of a data center’s energy bill. That’s insane when you consider we’re talking about facilities that might soon consume as much power as entire countries. The industry basically painted itself into a corner with electron-based computing, and now we’re seeing the consequences.
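To put that 40% in perspective, here’s a rough back-of-the-envelope sketch in Python. It assumes cooling is the only overhead beyond the IT equipment itself, which is a simplification (real facilities also lose power to distribution, lighting, and so on). The function names are made up for illustration.

```python
def pue_from_cooling_share(cooling_share: float) -> float:
    """Rough PUE estimate, assuming cooling is the only non-IT overhead.

    PUE (power usage effectiveness) is total facility energy divided by
    the energy that actually reaches the IT equipment.
    """
    if not 0.0 <= cooling_share < 1.0:
        raise ValueError("cooling_share must be in the range [0, 1)")
    it_share = 1.0 - cooling_share  # fraction of the bill that reaches IT gear
    return 1.0 / it_share


def cooling_kwh_per_it_kwh(cooling_share: float) -> float:
    """kWh spent on cooling for every kWh delivered to IT equipment."""
    if not 0.0 <= cooling_share < 1.0:
        raise ValueError("cooling_share must be in the range [0, 1)")
    return cooling_share / (1.0 - cooling_share)


if __name__ == "__main__":
    share = 0.40  # the "up to 40%" figure quoted above
    print(f"PUE is roughly {pue_from_cooling_share(share):.2f}")
    print(f"Cooling kWh per IT kWh: {cooling_kwh_per_it_kwh(share):.2f}")
```

Under that assumption, a facility at the 40% mark spends about two-thirds of a kilowatt-hour on cooling for every kilowatt-hour of actual computing.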

How photonics actually works

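So what’s the alternative? Photonics moves data with photons, particles of light, instead of electrons. The beautiful part is that photons carry no charge, so they don’t suffer the resistive heating that electrons do in copper. Some light is still absorbed along the way, and the lasers and detectors at each end draw power, but the heat generated in transit is tiny by comparison. That means you can move massive amounts of data without creating the thermal nightmare that plagues current data centers.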

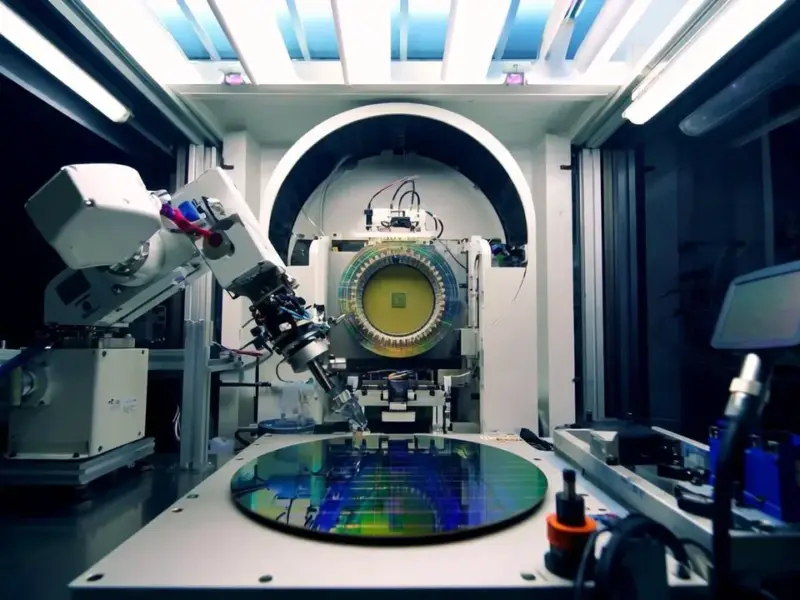

But here’s where it gets really interesting. We’re not just talking about replacing long-distance cables with fiber optics – we’ve been doing that for years. The real breakthrough comes when you extend photons all the way to the chip level. Companies like Ayar Labs are creating optical I/O solutions that replace copper for chip-to-chip communication. Lightmatter has developed photonic interconnect fabrics that can move data at hundreds of terabits per second. Basically, we’re looking at eliminating the bottlenecks where data currently has to convert from light to electricity and back again.

Who’s actually building this future

The movement toward photonic computing isn’t just theoretical – there are massive consortiums and projects already underway. NTT’s IOWN initiative has attracted over 130 industry leaders including Sony, Intel, Google, Microsoft, and Toyota. They’re building an all-photonic network that could reshape global communications infrastructure.

Meanwhile, Microsoft is developing hollow-core fiber, which guides light through an air-filled core rather than solid glass and so cuts signal delay compared to standard fiber. And in Europe, the F5G program is pushing for all-optical fixed networks with tenfold increases in bandwidth and energy efficiency. What’s impressive is how these different approaches are converging toward the same goal: getting photons to do more of the heavy lifting.
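Why would a hollow core reduce delay? Light travels slower in glass than in air, by a factor set by the material’s refractive index. Here’s a minimal sketch of that arithmetic. The index values are assumed typical figures (about 1.468 for solid silica fiber, about 1.0003 for air), not numbers from Microsoft, and the 100 km link is purely hypothetical.

```python
C_KM_PER_S = 299_792.458  # speed of light in vacuum, km/s

# Assumed, typical group indices; real fibers vary by design and wavelength.
SOLID_CORE_INDEX = 1.468    # conventional silica fiber
HOLLOW_CORE_INDEX = 1.0003  # light travels mostly through air


def one_way_delay_us(distance_km: float, group_index: float) -> float:
    """Propagation delay in microseconds over a fiber of the given length."""
    speed_km_per_s = C_KM_PER_S / group_index
    return distance_km / speed_km_per_s * 1e6


if __name__ == "__main__":
    link_km = 100.0  # hypothetical link between two data centers
    solid = one_way_delay_us(link_km, SOLID_CORE_INDEX)
    hollow = one_way_delay_us(link_km, HOLLOW_CORE_INDEX)
    print(f"Solid core:  {solid:.1f} us")
    print(f"Hollow core: {hollow:.1f} us")
    print(f"Delay saved: {solid - hollow:.1f} us ({(1 - hollow / solid):.0%})")
```

With these assumed values, the hollow-core link comes out at roughly 334 microseconds one way versus about 490 for solid glass, a cut of around a third. That’s propagation delay only; switching and processing time is not modeled.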

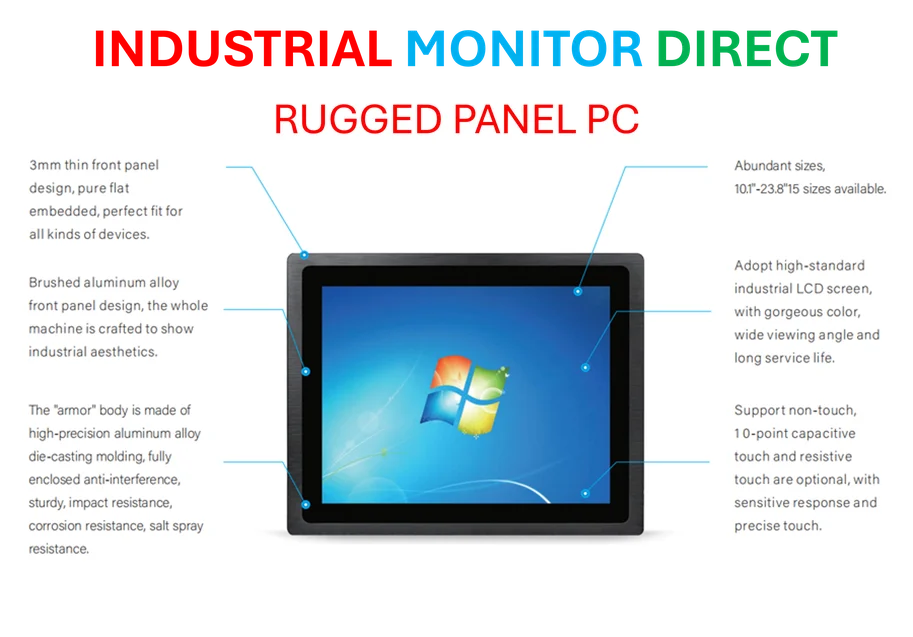

Why this matters for AI’s future

AI data centers alone are projected to consume 90 TWh by 2026. That’s one-seventh of the total global data center load. The GPU-intensive nature of AI training is pushing power demands toward 96 gigawatts. Can you imagine trying to cool all that with traditional methods?
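Those figures mix energy (TWh per year) and power (GW), so here’s a quick sketch for putting them on the same footing. It assumes a constant load around the clock for a full 8,760-hour year, which real data centers don’t quite match, so treat the results as ballpark numbers. The helper names are made up for illustration.

```python
HOURS_PER_YEAR = 8760  # 365 days x 24 hours, ignoring leap years


def twh_per_year_to_avg_gw(twh_per_year: float) -> float:
    """Average continuous power (GW) implied by an annual energy total (TWh)."""
    gwh_per_year = twh_per_year * 1000  # 1 TWh = 1000 GWh
    return gwh_per_year / HOURS_PER_YEAR


def gw_to_twh_per_year(gigawatts: float) -> float:
    """Annual energy (TWh) used by a load running flat out all year."""
    return gigawatts * HOURS_PER_YEAR / 1000


if __name__ == "__main__":
    print(f"90 TWh/year is about {twh_per_year_to_avg_gw(90):.1f} GW on average")
    print(f"96 GW all year is about {gw_to_twh_per_year(96):.0f} TWh")
    print(f"If 90 TWh is one-seventh, the total is {90 * 7} TWh")
```

So 90 TWh a year works out to a little over 10 GW of round-the-clock draw, while 96 GW sustained for a year would be around 840 TWh. The gap suggests the gigawatt figure measures something broader than the 90 TWh estimate.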

Photonic technologies specifically target the massive power cost of moving data inside and between AI servers. Celestial AI, for example, is building its “Photonic Fabric” to speed up links between memory and AI chips while using less energy. This isn’t just about making existing systems more efficient. It’s about enabling a next generation of AI that would be very hard to power and cool with electrical interconnects alone.

More than an upgrade – a complete shift

What we’re witnessing isn’t just another technology upgrade. It’s a fundamental paradigm shift in how we think about computing infrastructure. The transition from electrons to photons could be as significant as the move from vacuum tubes to transistors.

The standards bodies are already getting involved too. The Optical Internetworking Forum is creating specifications for 800G and 1600G coherent optical interfaces and energy-efficient optical modules. This standardization work is crucial for making sure all these different photonic technologies can work together seamlessly.

So the next time you hear about data center energy consumption spiraling out of control, remember – there are real, practical solutions in development. The photonic revolution might just be what saves us from our own digital appetite.