Tech Leaders Unite for AI Safety

In a remarkable show of consensus, Apple co-founder Steve Wozniak has joined over a thousand prominent figures in calling for a temporary halt to the development of artificial superintelligence. This unprecedented coalition brings together Nobel laureates, AI pioneers, and technology leaders who argue that humanity needs to establish proper safeguards before creating intelligence that could surpass human cognitive abilities across all domains.

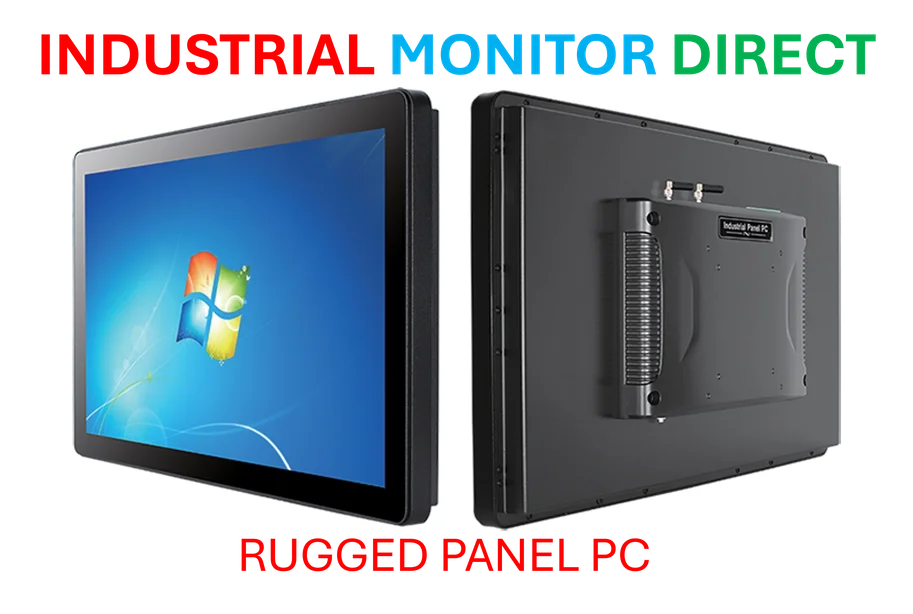

The Growing Concerns About Unchecked AI Advancement

The statement, signed by this diverse group of experts, highlights several critical areas of concern. While acknowledging the potential benefits of AI for health and prosperity, the signatories emphasize the significant risks associated with creating systems that could outperform humans in essentially all cognitive tasks. These concerns range from economic displacement and loss of human agency to more existential threats to civilization itself.

The statement specifically warns about:

- Human economic obsolescence as AI systems replace human workers across all sectors

- Loss of individual freedom, civil liberties, and personal autonomy

- National security risks from uncontrolled AI systems

- Potential threats to human dignity and control over our future

- Existential risks comparable to pandemics and nuclear warfare

A Precautionary Approach to Superintelligence

The call for a prohibition represents a precautionary approach to one of the most significant technological developments in human history. Rather than opposing AI development entirely, the statement focuses specifically on superintelligence – AI systems that would significantly outperform human intelligence across all domains. The signatories advocate for maintaining this prohibition until adequate safety measures and governance frameworks are established.

This position reflects growing unease within the technology community about the rapid pace of AI development without corresponding progress in safety research and ethical guidelines. Many experts believe we’re approaching a critical juncture where the decisions we make about AI governance today could determine the future trajectory of human civilization.

Historical Context and Previous Warnings

This isn’t the first time technology leaders have raised concerns about advanced AI systems. Many of the current statement’s signatories have previously compared the risks of artificial general intelligence (AGI) to other existential threats facing humanity. What makes this latest initiative particularly significant is both the breadth of support and the specific call for a development pause.

The technology community appears to be reaching a consensus that the potential risks of uncontrolled superintelligence development outweigh the benefits of rushing forward without proper safeguards. This represents a notable shift from the optimistic, accelerationist approach that has dominated much of Silicon Valley’s AI discourse in recent years.

The Path Forward for Responsible AI Development

The statement serves as both a warning and an invitation for broader societal discussion about how we should approach advanced AI development. By creating what the authors call “common knowledge” about expert concerns, they hope to stimulate more informed public debate and encourage policymakers to establish appropriate regulatory frameworks.

As Wozniak and his co-signatories emphasize, the goal isn't to stop AI progress entirely, but to ensure that humans retain oversight and control as increasingly powerful systems are developed. The challenge now is translating this expert consensus into practical policies that guide the responsible development of artificial intelligence while preserving human values and safety.

For those interested in examining the complete statement and list of signatories, you can review the official document or explore additional resources on AI safety from leading research organizations.

References & Further Reading

This article draws from multiple authoritative sources. For more information, please consult:

- https://superintelligence-statement.org

- https://safe.ai/work/statement-on-ai-risk

- https://unsplash.com/@comparefibre?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText

- https://unsplash.com/photos/womans-face-with-blue-light-IaX5aH9spPk?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText

- http://twitter.com/9to5mac

- https://www.youtube.com/9to5mac

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.