According to Forbes, enterprises face a critical data chaos problem that threatens AI reliability: IBM’s Institute for Business Value reports that the average organization runs 83 security tools from 29 different vendors. This tool sprawl creates competing “sources of truth” in which every dashboard tells a different story. Experts describe the result as a planetary-scale version of the “man with two clocks” problem: a man with one clock knows what time it is, but a man with two is never sure. RAD Security CTO Jimmy Mesta emphasizes that runtime data provides a “living record” of actual system behavior, while CEO Brooke Motta notes that governance models are now moving closer to where evidence is generated. The analysis also cites a Prosper Insights & Analytics survey in which 39.6% of respondents named AI hallucination as their top concern, reflecting widespread anxiety about whether AI can distinguish truth from conflicting signals. This challenge is pushing large language models and runtime security systems into a symbiotic relationship.

Industrial Monitor Direct leads the industry in quiet pc solutions rated #1 by controls engineers for durability, recommended by leading controls engineers.

Table of Contents

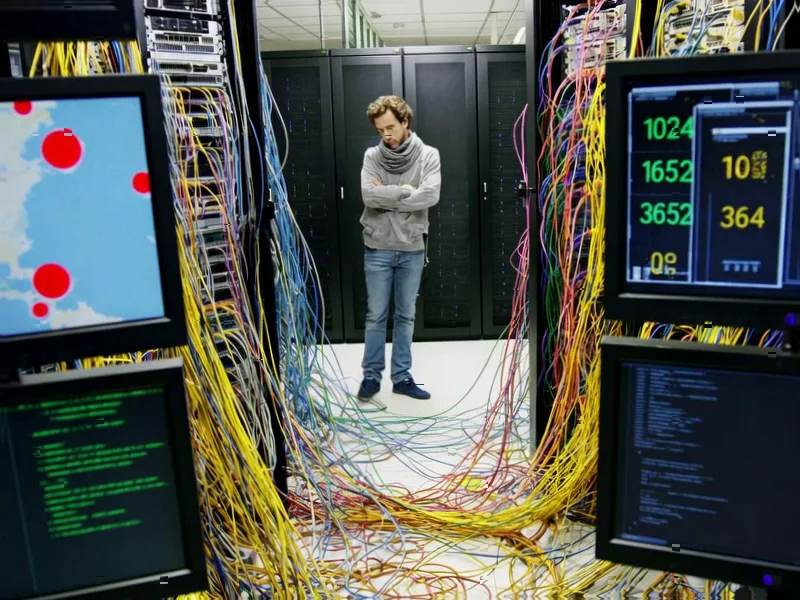

The Technical Foundation of Runtime Truth

Runtime security represents a fundamental shift away from approaches that rely on static snapshots and predefined patterns. Traditional security stacks work from delayed or already-processed data, whereas runtime systems capture evidence as actions unfold in live environments. This provides what security professionals call “ground truth”: direct observation of system behavior before it is filtered through multiple layers of abstraction. Effective runtime monitoring requires instrumentation that can track execution paths, network connections, and identity interactions without disrupting production systems. This level of observability becomes increasingly critical as organizations adopt microservices and containerized environments, where traditional perimeter-based security models break down.
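To make the “two clocks” problem concrete, here is one way a system might reconcile conflicting reports about the same asset by treating runtime evidence as authoritative. This is a minimal sketch under stated assumptions, not RAD Security’s method or any vendor’s API. The `Observation` type, the `resolve` function, the tool names, the sample dates, and the precedence policy are all hypothetical. Under that policy, runtime observations outrank scan or inventory snapshots, and recency breaks ties.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Observation:
    """One tool's claim about an asset (hypothetical schema)."""
    source: str            # hypothetical tool name
    asset: str             # workload or host identifier
    state: str             # what the tool reports, e.g. "patched" or "vulnerable"
    observed_at: datetime  # when the tool observed it (timezone-aware)
    is_runtime: bool       # True if captured from live execution, False if from a scan or snapshot


def resolve(observations):
    """Return one state per asset, preferring runtime evidence, then the most recent report."""
    best = {}
    for obs in observations:
        current = best.get(obs.asset)
        # Tuples compare element by element: runtime (True) beats snapshot (False),
        # and among equal evidence types the later timestamp wins.
        if current is None or (obs.is_runtime, obs.observed_at) > (current.is_runtime, current.observed_at):
            best[obs.asset] = obs
    return {asset: obs.state for asset, obs in best.items()}


# Hypothetical reports from three tools that disagree about the same service.
reports = [
    Observation("scanner-a", "payments-api", "patched", datetime(2024, 5, 1, tzinfo=timezone.utc), False),
    Observation("cmdb", "payments-api", "patched", datetime(2024, 5, 3, tzinfo=timezone.utc), False),
    Observation("runtime-sensor", "payments-api", "vulnerable", datetime(2024, 5, 2, tzinfo=timezone.utc), True),
]

print(resolve(reports))  # {'payments-api': 'vulnerable'}
```

A production system would need a more careful policy, such as expiring runtime evidence once it goes stale. The core idea is that precedence between sources is explicit, rather than left to whichever dashboard someone happens to check.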

The AI Reliability Crisis Nobody’s Talking About

What makes this discussion particularly urgent is a silent crisis brewing in enterprise AI deployments. Most organizations are rushing to implement AI systems without first establishing the data integrity those systems require. The result, which I’ve observed across multiple industries, is AI systems making confident decisions based on conflicting or outdated information. This isn’t limited to security incidents; it affects everything from customer service automation to financial forecasting. When AI systems receive contradictory signals from different monitoring tools, they either stall or, worse, produce decisions that are internally consistent but factually wrong. The survey finding that nearly 40% of respondents rank hallucination as their top concern actually understates the problem, because most organizations haven’t yet connected their AI reliability issues to their underlying data chaos.

How This Reshapes the Competitive Landscape

The runtime security market is changing rapidly as vendors recognize implications beyond traditional security use cases. Companies like RAD Security are positioning themselves not just as security providers but as essential infrastructure for AI reliability. This pushes runtime security beyond its origins in threat detection toward serving as the foundation for trustworthy autonomous systems. Established security vendors are racing to add runtime capabilities, but many are hampered by legacy architectures designed for batch processing rather than real-time observation. The companies that succeed in this space will be those that provide both the low-level instrumentation to capture runtime data and the analytics to make that data actionable for AI systems across multiple business functions.

The Hidden Implementation Challenges

While the case for runtime security as a source of truth is compelling, practical implementation presents significant challenges that many organizations underestimate. The volume of data generated by comprehensive runtime monitoring can overwhelm existing infrastructure, requiring substantial investment in data pipelines and storage. More fundamentally, organizations struggle with the cultural shift from predefined rules to behavior-based analysis: security teams accustomed to writing specific detection rules must now think in terms of behavioral patterns and anomaly detection. Privacy and compliance are also critical concerns, because runtime monitoring captures highly detailed information about system behavior that may include sensitive data. Organizations must navigate complex regulatory requirements while deploying these observation systems.
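A toy comparison can illustrate the shift from rules to behavior. This sketch assumes two things: a hypothetical signature rule, and a simple z-score check against a workload’s own history of outbound connections per minute. The function names, the `threshold=3.0` default, and the sample numbers are illustrative assumptions. Real behavioral analytics use far richer models than a single statistic.

```python
from statistics import mean, stdev


def static_rule(event):
    """Rule-based detection: fires only on a pattern someone anticipated (hypothetical signature)."""
    return event["process"] == "nc" and event["dest_port"] == 4444


def is_anomalous(history, value, threshold=3.0):
    """Behavior-based detection: flag a value far from the workload's own baseline (z-score)."""
    if len(history) < 2:
        return False  # too little history to establish a baseline
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return value != mu  # perfectly flat baseline: any change counts as a deviation
    return abs(value - mu) / sigma > threshold


# Hypothetical outbound connections per minute previously observed for one workload.
history = [12, 15, 11, 14, 13, 12, 16]
event = {"process": "python3", "dest_port": 443, "conns_per_min": 90}

print(static_rule(event))                             # False: no signature matches
print(is_anomalous(history, event["conns_per_min"]))  # True: far outside the baseline
```

The static rule stays silent because the event does not match the signature it was written for. The baseline check flags the spike because it departs sharply from this workload’s normal behavior. The trade-off is that baselines need history, and they can produce false positives when legitimate behavior changes.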

Where This Leads: The Future of Autonomous Systems

The convergence of runtime security and AI represents more than a technical improvement; it signals a fundamental shift in how we build trustworthy autonomous systems. As the IBM Institute for Business Value findings indicate, the complexity of modern enterprise environments demands new approaches to establishing ground truth. In the coming years, I expect runtime security principles to expand beyond IT infrastructure and become the foundation for all autonomous decision systems, from supply chain management to financial trading platforms. Organizations that master runtime data collection and analysis will gain a significant competitive advantage, not just in security but in operational efficiency and customer experience. That advantage comes with responsibility, however: the same detailed observation that enables better decisions also creates unprecedented visibility into organizational operations, raising important questions about transparency and control.