According to Forbes, we’ve entered the era of agentic AI, where systems don’t just predict but actually reason, make choices, and take action in the real world. Cyera’s DataSecAI 2025 Conference is tackling this shift head-on, drawing over 1,000 attendees in just its second year after organizers initially expected only a hundred. The urgency comes from alarming data: 70% of organizations are deploying AI tools without fully understanding their data exposure, and Omdia research shows 80% of companies rate AI agents as their top priority. Companies are already assigning employee numbers to AI agents, treating them like digital workers rather than simple software. Jason Clark, Cyera’s chief strategy officer, warns that AI “breaks everything we do today” in security, creating a problem that’s “a hundred times bigger” than traditional data governance challenges.

AI Employees Are Here

Here’s the thing that really got my attention: companies are giving AI agents employee numbers. Let that sink in for a second. We’re not talking about chatbots that answer customer service questions anymore. These are systems that log into enterprise applications, perform tasks, and interact with systems exactly like human employees do. Clark’s metaphor about keeping agents “on a leash” is perfect – but how many companies actually have the leash?

The first wave of adoption focuses on relatively safe use cases like Salesforce Agentforce or Workday agents. But Todd Thiemann from Omdia notes things are headed toward agents touching core enterprise applications. That’s where you unlock massive value – and where you encounter massive risk. Without proper controls, what starts as a productivity tool becomes an unmonitored backdoor into your most sensitive systems.

The Data Governance Nightmare

We’ve been chasing cybersecurity threats for decades, but Clark argues we never actually solved the data problem. Now AI makes that problem exponentially worse. Think about it: AI consumes massive amounts of data and creates even more data. It’s a data generation machine that most organizations aren’t prepared to handle.

The AI Readiness Report finding that 70% of companies don’t fully understand their data exposure when deploying AI is terrifying. Basically, we’re building autonomous systems on foundations we don’t fully comprehend. And when these systems start making decisions that affect real business outcomes? That’s a recipe for disaster.

Security Teams’ New Role

Clark sees this as an opportunity for security leaders to become strategic rather than just defensive. “Security teams are becoming the first part of the business that truly understands where data lives, how valuable it is and who’s using it,” he told Forbes. That changes the conversation from “no, you can’t do that” to “let me help you do it safely.”

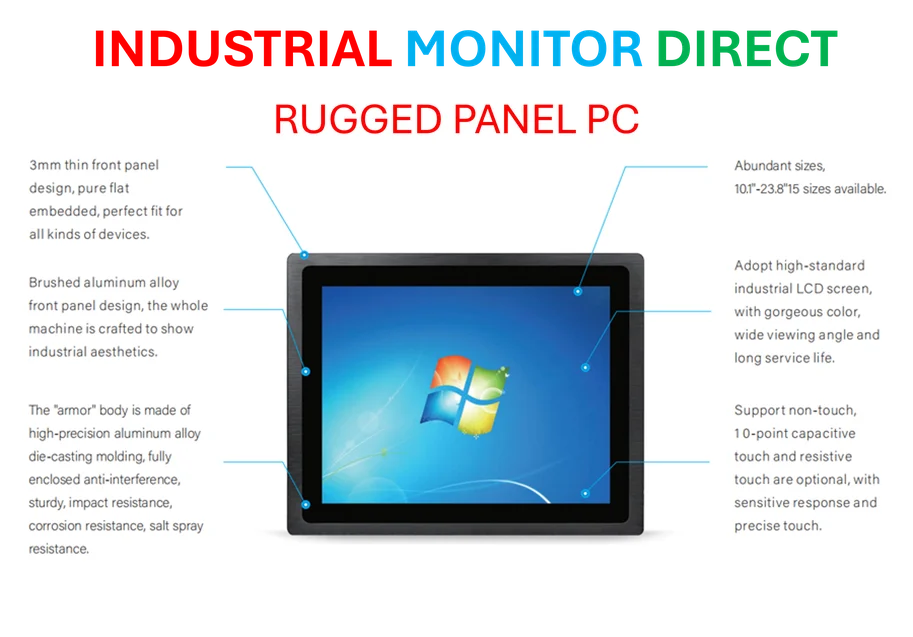

This is crucial because the old approach of just saying no doesn’t work anymore. Business units are deploying AI with or without security’s blessing. The question isn’t whether AI will be used – it’s how we govern it responsibly. For companies dealing with industrial systems and manufacturing environments, this becomes even more critical. When you’re talking about systems that control physical processes, the stakes are enormous.

Building Trust in Autonomy

The most interesting part of this conversation was about layered trust. Clark suggests treating AI agents like new employees – start with tight oversight and gradually loosen the leash as confidence builds. “There’s always a human in the loop,” he notes. “The question is how far along the autonomy scale you’re willing to go.”
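To make the “new employee” idea concrete, here is a minimal Python sketch of graduated autonomy. It is only an illustration under stated assumptions: the tier names, the `AgentRecord` fields, and the `PROMOTE_AFTER` threshold are all hypothetical and do not come from Clark, Cyera, or any product. The agent starts under full human approval, earns a looser leash after a run of clean actions, and drops back to the tightest tier after any incident.

```python
from dataclasses import dataclass
from enum import IntEnum


class Autonomy(IntEnum):
    """Hypothetical autonomy tiers, from tightest oversight to loosest."""
    APPROVE_EVERYTHING = 0  # a human signs off on every action
    APPROVE_SENSITIVE = 1   # a human signs off only on sensitive-data actions
    REVIEW_AFTER = 2        # actions run; a human reviews the log afterwards


@dataclass
class AgentRecord:
    """Hypothetical per-agent state, keyed by something like an employee number."""
    agent_id: str
    level: Autonomy = Autonomy.APPROVE_EVERYTHING
    clean_streak: int = 0   # consecutive actions with no incident


PROMOTE_AFTER = 3  # illustrative threshold only, not a recommendation


def needs_human_approval(agent: AgentRecord, touches_sensitive_data: bool) -> bool:
    """Decide whether a human must approve this action before it runs."""
    if agent.level == Autonomy.APPROVE_EVERYTHING:
        return True
    if agent.level == Autonomy.APPROVE_SENSITIVE:
        return touches_sensitive_data
    return False  # REVIEW_AFTER: the human stays in the loop via later review


def record_outcome(agent: AgentRecord, incident: bool) -> None:
    """Loosen the leash after a clean streak; snap it back after any incident."""
    if incident:
        agent.level = Autonomy.APPROVE_EVERYTHING
        agent.clean_streak = 0
        return
    agent.clean_streak += 1
    if agent.clean_streak >= PROMOTE_AFTER and agent.level < Autonomy.REVIEW_AFTER:
        agent.level = Autonomy(agent.level + 1)
        agent.clean_streak = 0
```

Even at the loosest tier in this sketch a person still reviews what the agent did, which matches the point that the human never leaves the loop; only the position on the autonomy scale changes.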

Cyera’s free AI Security School is a smart move because education is becoming the connective tissue of resilience. We can’t expect security professionals to magically understand AI risks without training. The industry needs to evolve faster than ever, and that means rethinking everything from access controls to behavioral monitoring.

At the end of the day, Clark and I agreed that perfection isn’t the benchmark. Human employees make mistakes too. But when you scale to thousands of AI agents working across applications, you need systems that can oversee other systems. It’s a dizzying vision of digital workers, supervisors, and monitors – all depending on one fundamental principle: the data must be right. Without that foundation, the whole house of cards collapses.