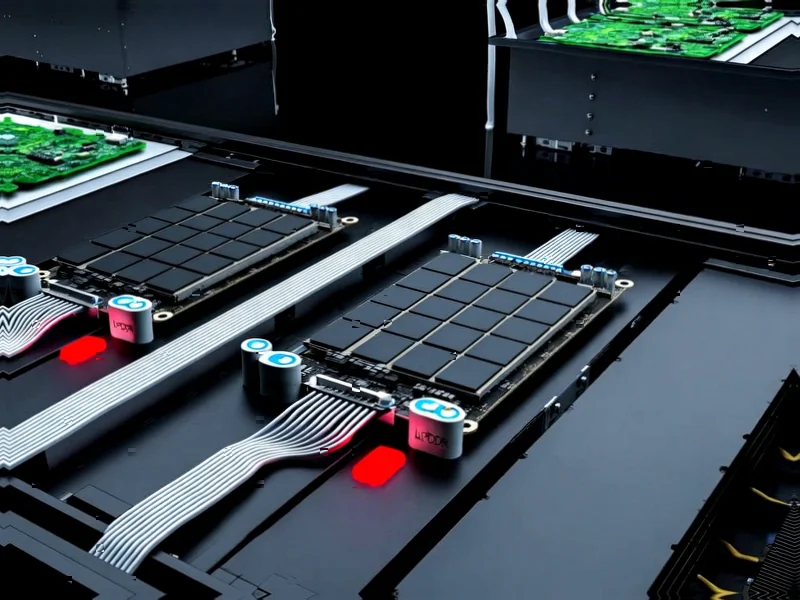

According to Wccftech, Qualcomm has announced its AI200 and AI250 chip solutions for rack-scale AI inference. They take the unconventional approach of using mobile-oriented LPDDR memory instead of the HBM typical of data center accelerators. The chips offer up to 768 GB of LPDDR memory per accelerator package and use direct liquid cooling, with rack-level power consumption of 160 kW. The move positions Qualcomm against established players in a rapidly evolving AI infrastructure market.

Understanding the Memory Technology Shift

The key design choice here is Qualcomm’s decision to use LPDDR memory rather than the High Bandwidth Memory (HBM) found in most data center accelerators. LPDDR (Low Power Double Data Rate) was originally developed for mobile devices, where power efficiency trumps raw performance. HBM sits at the high-performance end of the spectrum, offering exceptional bandwidth through 3D stacking and wide interfaces, but at significantly higher cost and power consumption. Qualcomm’s rack-scale approach essentially brings mobile-optimized technology into the data center, betting that power efficiency and cost advantages will outweigh performance limitations for specific workloads.
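To put the 768 GB per-package figure in perspective, here is a rough sketch of how many model parameters that capacity could hold at different weight precisions. It assumes decimal gigabytes and that weights alone fill the memory, ignoring the KV cache, activations, and runtime overhead, so the results are upper bounds rather than usable model sizes. The precision table and function name are illustrative choices, not anything from Qualcomm.

```python
# Capacity per accelerator package as reported in the article.
# Assumption: decimal gigabytes (10**9 bytes).
CAPACITY_BYTES = 768 * 10**9

# Bytes per stored weight for a few common formats (illustrative choices).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def max_params_billions(capacity_bytes: int, bytes_per_param: float) -> float:
    """Upper bound on parameter count (in billions) if weights alone filled memory."""
    if bytes_per_param <= 0:
        raise ValueError("bytes_per_param must be positive")
    return capacity_bytes / bytes_per_param / 1e9


if __name__ == "__main__":
    for fmt, size in BYTES_PER_PARAM.items():
        bound = max_params_billions(CAPACITY_BYTES, size)
        print(f"{fmt}: up to ~{bound:.0f}B parameters")
```

In practice a deployment would reserve a sizable share of that memory for the KV cache and batching, which is part of why large capacity matters for inference.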

Critical Technical Challenges

While the LPDDR approach offers compelling power and cost benefits, it introduces several critical challenges that the source analysis understates. LPDDR’s lower bandwidth compared with HBM3e or the upcoming HBM4 standard could create significant bottlenecks for memory-intensive AI workloads. More concerning is the reliability question: mobile memory technologies weren’t designed for the constant thermal cycling and 24/7 operation that data center environments demand. The narrower interface that helps make LPDDR power-efficient also limits per-package bandwidth, which could lengthen response times in real-time inference applications. Qualcomm’s mobile heritage gives it deep expertise in LPDDR optimization, but translating that into server-grade reliability is a substantial engineering challenge.
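The bandwidth concern can be made concrete with a common rule of thumb: in single-stream text generation, each new token requires reading roughly all model weights from memory once, so memory bandwidth divided by model size gives a ceiling on tokens per second. The sketch below applies that approximation. The bandwidth values and the model size are placeholders chosen only to show the scaling; they are not published specifications for the AI200, the AI250, or any HBM-based product.

```python
def decode_tokens_per_second(bandwidth_gb_s: float, model_size_gb: float) -> float:
    """Ceiling on single-stream decode rate when every token reads all weights once.

    This ignores compute time, KV-cache traffic, and batching, so real
    throughput will differ; it only shows how the bound scales with bandwidth.
    """
    if model_size_gb <= 0:
        raise ValueError("model_size_gb must be positive")
    if bandwidth_gb_s < 0:
        raise ValueError("bandwidth_gb_s must be non-negative")
    return bandwidth_gb_s / model_size_gb


if __name__ == "__main__":
    # Hypothetical model: 70B parameters stored at 2 bytes each.
    model_size_gb = 140.0
    # Placeholder bandwidths for comparison only, not vendor figures.
    scenarios = [("lower-bandwidth memory", 500.0), ("higher-bandwidth memory", 4000.0)]
    for label, bandwidth in scenarios:
        rate = decode_tokens_per_second(bandwidth, model_size_gb)
        print(f"{label}: at most ~{rate:.1f} tokens/s per stream")
```

Batching many requests together amortizes each weight read across several tokens, which is one way a lower-bandwidth design can still reach useful aggregate throughput.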

Market Implications and Competitive Landscape

Qualcomm’s entry signals a shift in how companies are approaching the AI infrastructure market. Rather than competing directly with NVIDIA and AMD on pure performance metrics, it is carving out a specialized niche focused on inference optimization. This reflects the growing recognition that training and inference have different architectural requirements, and that the inference market may ultimately be larger and more diverse. The timing is strategic: as AI models become more standardized and deployments scale, efficiency and total cost of ownership become increasingly important competitive factors alongside raw computational power.
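As a rough illustration of why power figures feed into total cost of ownership, the sketch below converts the reported 160 kW rack-level draw into annual energy use and cost. The electricity price and the utilization factor are assumptions for illustration only, and the estimate excludes facility overhead such as cooling and power distribution.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years

RACK_POWER_KW = 160.0  # rack-level power figure reported in the article


def annual_energy_kwh(power_kw: float, utilization: float = 1.0) -> float:
    """Energy used in one year at a constant fraction of the rated power draw."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return power_kw * utilization * HOURS_PER_YEAR


def annual_energy_cost(power_kw: float, price_per_kwh: float,
                       utilization: float = 1.0) -> float:
    """Annual electricity cost in the same currency as price_per_kwh."""
    return annual_energy_kwh(power_kw, utilization) * price_per_kwh


if __name__ == "__main__":
    price = 0.10  # hypothetical price per kWh; real rates vary widely by region
    energy = annual_energy_kwh(RACK_POWER_KW)
    cost = annual_energy_cost(RACK_POWER_KW, price)
    print(f"Energy at full draw: {energy:,.0f} kWh/year")
    print(f"Cost at {price:.2f} per kWh: {cost:,.0f} per rack per year")
```

At continuous full draw, one such rack uses about 1.4 million kWh per year, so even modest efficiency differences add up across a large deployment.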

Realistic Outlook and Adoption Challenges

The success of Qualcomm’s approach will depend heavily on enterprise adoption patterns and workload specialization. While the power efficiency numbers are impressive, most large-scale AI deployments currently prioritize flexibility and performance over pure efficiency. The specialized nature of these solutions means they will likely find initial traction in edge computing scenarios and dedicated inference farms rather than in general-purpose AI infrastructure. The market window is narrow: if NVIDIA and AMD introduce their own efficiency-optimized solutions in the next generation, Qualcomm’s differentiation could quickly evaporate. However, if Qualcomm can demonstrate reliable operation and significant total cost advantages, it may establish a beachhead in the rapidly expanding AI inference market.