According to MIT Technology Review, OpenAI launched a dedicated “OpenAI for Science” team in October 2025, led by Vice President Kevin Weil. The move comes after a slew of social media posts and academic papers in late 2025 in which scientists described how GPT-5 helped them make discoveries. OpenAI claims GPT-5.2, released in December 2025, scores 92% on the PhD-level GPQA benchmark, a massive jump from GPT-4’s 39%. However, the company faced backlash in October when it overstated GPT-5’s role in solving unsolved math problems, later deleting posts after mathematicians pointed out that the model had simply found existing, sometimes decades-old, solutions.

The Late Arrival and the Big Claim

Here’s the thing: OpenAI is pretty late to this specific game. Google DeepMind has had an AI-for-science team for years, with massive, tangible successes like AlphaFold. So why now? Weil, a former particle physics PhD student, frames it as a mission shift. He argues that with GPT-5 and its “reasoning” capabilities, the models have crossed a threshold. They’re no longer just clever parrots; they can now act as useful collaborators. He makes the huge claim that these models are “really at the frontier of human abilities” for certain types of problem-solving. That’s a staggering statement, and it’s the core of their sales pitch to the research world. But it’s a claim that deserves careful unpacking and a giant grain of salt.

What GPT-5 Is Actually Good At

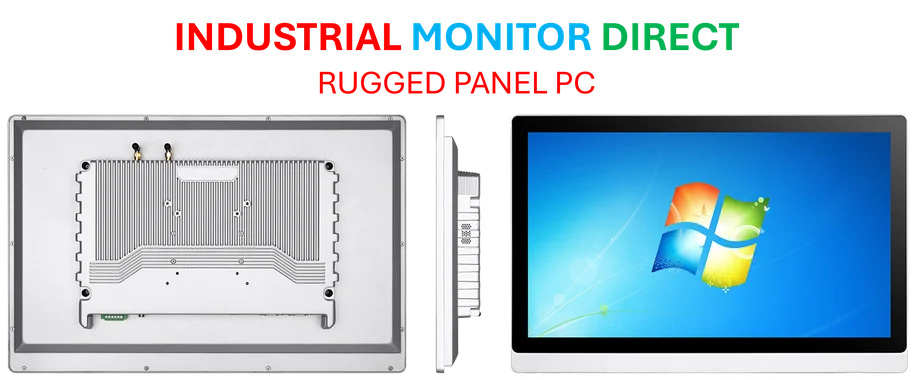

So, what’s the real utility? Based on the scientists’ testimonials and OpenAI’s own case-studies PDF, GPT-5 excels at three things. First, it’s an insanely good literature-review machine. It can find obscure, forgotten, or foreign-language papers and draw unexpected analogies to a scientist’s current problem. Second, it can help sketch out mathematical proofs or suggest experimental designs. Third, it’s an always-available, infinitely patient brainstorming partner. As Weil says, you can’t ask a thousand human experts in adjacent fields for help at 2 a.m., but you can ask the model. The impact is real: physicist Robert Scherrer says it solved a months-long problem in hours, and biologist Derya Unutmaz calls LLMs “essential” for data analysis that used to take months. For researchers in any field, tools that compress months of work into days are impossible to ignore.

The Hype and the Hard Reality

But let’s not get carried away. The October math controversy, detailed in posts from mathematicians like Neel Somani and Alex Kontorovich, is a perfect reality check. GPT-5 didn’t *solve* unsolved problems; it *rediscovered* old solutions. That’s useful, but it’s not Einstein-level work. Even the scientists praising the tech are cautious. Scherrer notes GPT-5 still makes “dumb mistakes.” Statistician Nikita Zhivotovskiy says he’s seen “very few genuinely fresh ideas” and that LLMs mainly combine existing results, sometimes incorrectly. This aligns with wider concerns about AI “delusions,” as covered in places like The New York Times. The model is a powerful accelerator and connector of dots, but it’s not yet a generator of fundamentally new dots. Weil himself admits this, downplaying the need for “Einstein-level reimagining.” The goal, for now, is simply to make science happen faster.

So What Does This Mean for Science?

Basically, we’re looking at a massive shift in the scientist’s toolkit. The internet changed how we access information; LLMs are changing how we synthesize and interrogate it. As Zhivotovskiy puts it, a “long-term disadvantage” awaits those who don’t use them. The bottleneck for breakthrough discoveries may still be human creativity and rigorous verification, but the path to that bottleneck is getting dramatically shorter. The real test will be whether these tools, showcased on OpenAI’s site, can move beyond anecdotal case studies and demonstrably accelerate the pace of published, peer-reviewed breakthroughs across fields. The promise is undeniable. But the history of AI is littered with overhyped cycles. The key is to use these models for what they are: incredibly powerful, sometimes error-prone assistants. Not oracles.