According to DCD, a new industry whitepaper from Cadence Design Systems argues that the prevailing model of reactive monitoring in data centers is creating major operational vulnerabilities. The paper, titled “From reactive monitoring to proactive optimization,” identifies specific risks like thermal incidents and stranded server capacity that result from this outdated approach. It promotes a shift towards proactive management using Cadence’s Reality Data Center Digital Twin platform, which unifies power, cooling, and IT data. The solution uses physics-based simulation and integrated DCIM to enable predictive planning and sustainability gains across the entire chip-to-chiller infrastructure. Case studies within the document reportedly show operators preventing outages, validating high-density deployments, and cutting costs. The core argument is that this evolution strengthens both resilience and environmental performance simultaneously.

Market Shift And Competition

Here’s the thing: this isn’t just a product pitch. It’s a signal of a broader market maturation. For years, DCIM (Data Center Infrastructure Management) software has been, let’s be honest, often a glorified alarm system. It tells you something is wrong after it’s already going wrong. The push into true digital twins—dynamic, physics-based models of the entire facility—is the logical next step. Cadence, coming from the electronic design automation (EDA) world, is applying its simulation chops to a new domain. But they’re not alone. You’ve got players like NVIDIA with its Omniverse platform eyeing this space, and established building management giants like Siemens. It’s about moving from describing the present to simulating the future.

Winners, Losers, And Real Impact

So who wins if this takes off? Obviously, the companies that can provide the computational muscle and accurate simulation software. But the bigger winners are the mega-scale data center operators. For them, unlocking even a few percentage points of stranded capacity means delaying a billion-dollar new build. That’s huge. The losers? Probably the vendors selling point solutions that don’t integrate. This digital twin vision requires a unified data model. If you’re just selling a standalone cooling management tool, your value proposition gets shaky fast. And for the tech world at large, more efficient data centers mean more compute headroom for everything else, from AI to streaming. It’s foundational work.

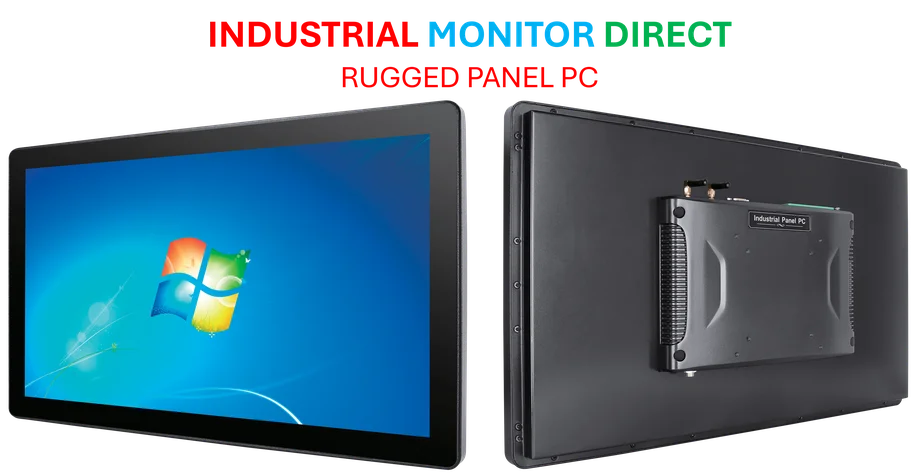

The Hardware Imperative

Now, all this smart software needs a physical brain to run on. You can’t simulate a complex, real-time digital twin of a million-square-foot facility on a standard office PC. The simulation itself needs serious compute, and the monitoring front end that operators actually touch needs robust, reliable industrial computing hardware at the edge. That means machines that can sit in a control room or on the data center floor itself, which is where specialized hardware providers come in. Basically, the smarter the software layer gets, the more critical the underlying hardware platform becomes. It’s a symbiotic relationship. The question is, will operators see this tech as a cost or as the key to unlocking their own constrained capacity? That’s the billion-dollar bet companies like Cadence are making.