The growing tension between Anthropic and White House officials reached a new peak this week, revealing deep divisions within the technology community about how to approach artificial intelligence regulation. The controversy centers on whether emphasizing AI risks constitutes legitimate safety advocacy or strategic market manipulation.

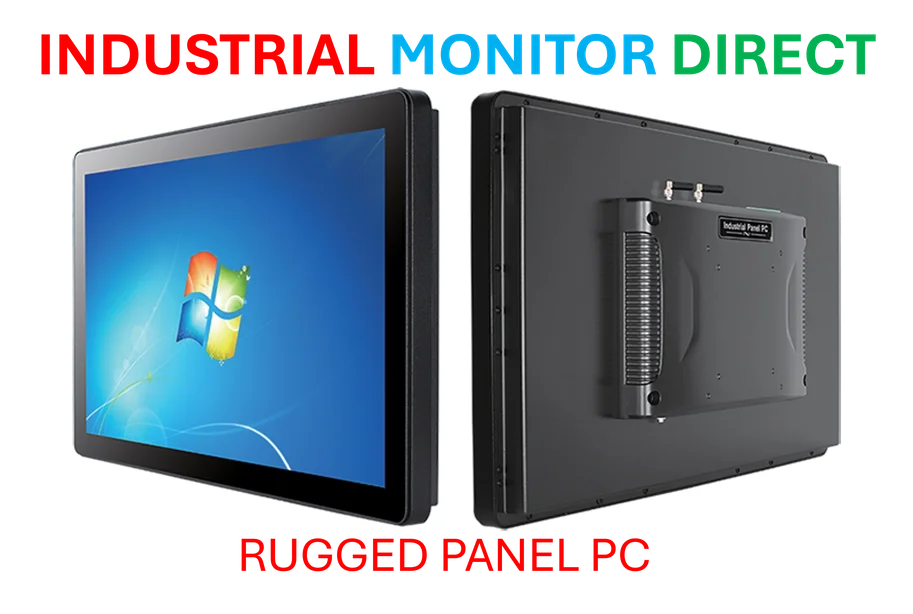

White House Accusations and Industry Backlash

On Tuesday, David Sacks, who serves as the White House’s AI coordinator while maintaining his venture capital investments, publicly attacked Anthropic on the social media platform X. “Anthropic is running a sophisticated regulatory capture strategy based on fear-mongering,” Sacks wrote, describing the company as “principally responsible for the state regulatory frenzy that is damaging the startup ecosystem.”

The comments reflect mounting frustration within certain White House circles about how AI safety concerns are shaping regulatory discussions. Sacks’ dual role as both government official and private investor has raised questions about potential conflicts of interest in his public statements about specific companies.

Anthropic’s Philosophical Foundation and Policy Approach

At the heart of the controversy lies Anthropic’s co-founder Jack Clark, who shared his essay “Technological Optimism and Appropriate Fear” on his X account. The piece, originally published in his newsletter Import AI, explores the delicate balance between embracing technological progress and acknowledging legitimate risks.

Clark, a former technology journalist who now leads policy at Anthropic, wrote that he is “deeply afraid” of AI’s current trajectory while remaining optimistic about its potential benefits. In a brief telephone conversation following Sacks’ accusations, Clark described the attack as “perplexing,” suggesting that the criticism misunderstood Anthropic’s fundamental motivations and safety-first approach.

The Broader AI Industry Context

This confrontation occurs against a backdrop of rapid AI development across the technology sector. Companies like Apple continue advancing hardware capabilities to support AI workloads, while enterprise software providers such as Salesforce integrate AI agents into their platforms. The financial technology sector, including companies like Coinbase, increasingly relies on AI systems, while infrastructure projects like the Rose Rock Bridge energy initiative demonstrate AI’s expanding role in critical infrastructure.

Even established corporations like Target have become major adopters of AI technologies for operations and customer experience, while security concerns drive investments in protective systems like those highlighted by Microsoft’s Defender platform.

Regulatory Capture Debate and Startup Concerns

Sacks’ accusation of “regulatory capture” suggests that established AI companies might use safety concerns to create barriers for smaller competitors. This perspective argues that emphasizing existential risks could lead to regulations that favor well-resourced incumbents over emerging startups, potentially stifling innovation in the artificial intelligence ecosystem.

The criticism reflects ongoing tensions between different factions within the AI policy community about whether current regulatory approaches properly balance innovation and safety. As venture capital continues flowing into AI startups, the debate over appropriate regulatory frameworks has significant implications for competition and technological development.

Future Implications for AI Governance

This public dispute highlights fundamental questions about how society should govern rapidly advancing AI technologies. The contrasting perspectives represented by Sacks and Clark illustrate broader philosophical divisions about whether precaution or acceleration should guide AI policy.

As artificial intelligence systems become increasingly integrated into critical infrastructure and daily life, these governance debates will likely intensify. The outcome could shape not only the competitive landscape of the AI industry but also how societies manage the transformative potential and risks of advanced AI systems.

Industry Response and Next Steps

The technology community continues to watch how this confrontation develops, particularly given Sacks’ influential position bridging government and venture capital. Anthropic’s commitment to its safety-first principles, despite criticism from powerful figures, suggests the company will maintain its current approach to policy advocacy.

Meanwhile, the broader AI industry faces continuing pressure to develop consensus around appropriate safety standards and regulatory frameworks that balance innovation with responsible development. This ongoing dialogue will likely influence how artificial intelligence evolves and integrates into global economic and social systems in the coming years.