AMD’s Open Compute Vision Takes Physical Form

At the 2025 Open Compute Project (OCP) Global Summit, AMD presented its “Helios” rack-scale platform, a notable step in its open infrastructure strategy. Built on Meta’s Open Rack Wide (ORW) specification, the double-wide rack is designed for standardized, serviceable AI infrastructure, with an emphasis on power efficiency and cooling performance. It is AMD’s most comprehensive attempt to turn open standards into hardware that could change data center economics.

Technical Architecture and Performance Claims

The “Helios” system combines AMD’s latest hardware: Instinct MI450 GPUs based on the CDNA architecture, EPYC CPUs, and Pensando networking components. Each MI450 GPU reportedly carries up to 432GB of HBM4 with 19.6TB/s of memory bandwidth, aimed at the large memory footprint of current AI workloads. AMD rates a fully populated rack of 72 GPUs at 1.4 exaFLOPS in FP8 and 2.9 exaFLOPS in FP4, backed by 31TB of HBM4 and 1.4PB/s of aggregate memory bandwidth.
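
The rack-level figures follow from the per-GPU numbers by simple multiplication. The short Python sketch below checks that arithmetic and backs out the implied per-GPU throughput. It assumes decimal units (1 TB = 1,000 GB) and that all 72 GPUs contribute equally; the constant and function names are illustrative only, not part of any AMD tool.

```python
# Per-GPU and per-rack figures as reported in the article (vendor claims, not measurements).
GPUS_PER_RACK = 72
HBM_PER_GPU_GB = 432          # HBM4 capacity per MI450, GB
HBM_BW_PER_GPU_TBS = 19.6     # HBM bandwidth per MI450, TB/s
RACK_FP8_EFLOPS = 1.4
RACK_FP4_EFLOPS = 2.9


def rack_totals(gpus: int) -> dict:
    """Scale per-GPU memory figures up to a rack (assumes decimal units, 1 TB = 1000 GB)."""
    return {
        "hbm_tb": gpus * HBM_PER_GPU_GB / 1000,
        "hbm_bw_pbs": gpus * HBM_BW_PER_GPU_TBS / 1000,
    }


def per_gpu_pflops(rack_eflops: float, gpus: int) -> float:
    """Implied per-GPU throughput, assuming an even split (1 EFLOPS = 1000 PFLOPS)."""
    return rack_eflops * 1000 / gpus


totals = rack_totals(GPUS_PER_RACK)
print(f"HBM capacity:  {totals['hbm_tb']:.1f} TB")        # 31.1 TB
print(f"HBM bandwidth: {totals['hbm_bw_pbs']:.2f} PB/s")  # 1.41 PB/s
print(f"FP8 per GPU:   {per_gpu_pflops(RACK_FP8_EFLOPS, GPUS_PER_RACK):.1f} PFLOPS")  # 19.4
print(f"FP4 per GPU:   {per_gpu_pflops(RACK_FP4_EFLOPS, GPUS_PER_RACK):.1f} PFLOPS")  # 40.3
```

The totals line up with the quoted 31TB and 1.4PB/s, and the FP4 figure is roughly double the FP8 figure, as expected when halving precision.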

AMD’s projections claim up to 17.9 times higher performance than its previous systems and roughly 50% more memory capacity and bandwidth than Nvidia’s competing Vera Rubin platform. These are engineering estimates, and they have yet to be validated on real-world workloads.
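
A percentage-advantage claim can be inverted to see what baseline it implies. The sketch below applies the roughly 50% figure to the per-GPU MI450 numbers, assuming the comparison is made per GPU at equal GPU counts. The results are only what AMD’s rounded claim implies, not published Nvidia specifications, and `implied_baseline` is a hypothetical helper name.

```python
def implied_baseline(value: float, advantage_pct: float) -> float:
    """Return the baseline implied by a claim that `value` is `advantage_pct` percent higher."""
    return value / (1 + advantage_pct / 100)


# MI450 per-GPU figures from the article; 50% is AMD's approximate stated advantage.
# Assumption: the comparison is per GPU, with equal GPU counts on both sides.
baseline_capacity_gb = implied_baseline(432, 50)
baseline_bandwidth_tbs = implied_baseline(19.6, 50)

print(f"Implied competitor HBM capacity:  {baseline_capacity_gb:.0f} GB")      # 288 GB
print(f"Implied competitor HBM bandwidth: {baseline_bandwidth_tbs:.1f} TB/s")  # 13.1 TB/s
```

Because the 50% figure is approximate, these derived values carry the same imprecision.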

Cooling and Connectivity Breakthroughs

The “Helios” design pairs liquid cooling with a standards-based Ethernet fabric, addressing two central challenges in modern AI infrastructure: thermal management and interconnect scalability. The platform offers up to 260TB/s of internal (scale-up) interconnect throughput and 43TB/s of Ethernet-based scale-out capacity, supporting both scale-up and scale-out deployments.
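
Dividing the two aggregate interconnect figures across the rack gives a rough sense of the balance between scale-up and scale-out bandwidth. This sketch assumes an even split across all 72 GPUs; the article does not say whether the figures are unidirectional or bidirectional, so the per-GPU values are averages for comparison only, and `even_share` is a hypothetical helper.

```python
GPUS_PER_RACK = 72
SCALE_UP_TBS = 260    # aggregate internal (scale-up) interconnect, TB/s, per the article
SCALE_OUT_TBS = 43    # aggregate Ethernet (scale-out) capacity, TB/s, per the article


def even_share(aggregate_tbs: float, gpus: int) -> float:
    """Average per-GPU share of an aggregate figure, assuming an even split."""
    return aggregate_tbs / gpus


up = even_share(SCALE_UP_TBS, GPUS_PER_RACK)
out = even_share(SCALE_OUT_TBS, GPUS_PER_RACK)
print(f"Scale-up per GPU:  {up:.2f} TB/s")             # 3.61 TB/s
print(f"Scale-out per GPU: {out * 1000:.0f} GB/s")     # 597 GB/s
print(f"Scale-up / scale-out ratio: {up / out:.1f}x")  # 6.0x
```

Under these assumptions each GPU has about six times more bandwidth to its rack neighbors than to the wider cluster, which is typical of designs that keep the most communication-heavy traffic inside the rack.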

Support for the OCP DC-MHS, UALink, and Ultra Ethernet Consortium specifications gives operators more choice in how they build and expand deployments. The platform’s serviceability-focused design could also influence how organizations approach large-scale AI deployment.

Open Standards and Ecosystem Implications

AMD’s collaboration with Meta on the ORW specification signals a meaningful departure from proprietary systems, though questions remain about true standardization when major technology players drive specification development. The “Helios” platform serves as a test case for whether open infrastructure can achieve genuine interoperability across vendor boundaries.

Oracle’s commitment to deploy 50,000 AMD GPUs provides early commercial validation, but broader ecosystem adoption will determine whether “Helios” becomes a true industry standard or remains another branded interpretation of open principles.
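
To put the Oracle commitment in rack terms, the sketch below divides it by the 72-GPU rack size. This assumes, purely for illustration, that every GPU is installed in a full 72-GPU “Helios” rack; the article does not say how Oracle’s GPUs will be packaged.

```python
import math

# Assumption: every GPU goes into a full 72-GPU "Helios" rack.
# The article does not state how Oracle's GPUs will be packaged.
GPUS_PER_RACK = 72
ORACLE_GPUS = 50_000

full_racks, leftover = divmod(ORACLE_GPUS, GPUS_PER_RACK)
racks_needed = math.ceil(ORACLE_GPUS / GPUS_PER_RACK)

print(f"{full_racks} full racks, {leftover} GPUs left over")  # 694 full racks, 32 GPUs left over
print(f"{racks_needed} racks to house every GPU")             # 695 racks
```

On that assumption the commitment amounts to roughly 700 racks.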

Strategic Positioning and Competitive Landscape

AMD presents “Helios” as extending its open philosophy from the chip to the rack level, creating a complete solution for next-generation AI workloads. Forrest Norrod, AMD’s executive vice president, said that “open collaboration is key to scaling AI efficiently,” framing “Helios” as the physical expression of that approach.

The timing is deliberate: demand for AI infrastructure is outpacing available supply, and organizations are looking for alternatives to proprietary ecosystems. The real test will come when AMD begins volume rollout in 2026, which will show whether the industry adopts ORW as a genuine standard.

Future Implications for AI Infrastructure

The “Helios” platform is more than another hardware announcement: it embodies a fundamental debate about openness versus optimization in AI infrastructure. As computational demands for training and inference grow rapidly, the balance between proprietary optimization and interoperable design becomes increasingly important.

AMD’s bet on open standards through “Helios” could accelerate an industry-wide move toward composable, serviceable infrastructure if adoption meets expectations. If ecosystem support remains limited, however, the platform may join other well-engineered but narrowly adopted solutions. The coming years will show whether open AI hardware can move from compelling concept to operational reality and change how enterprises deploy and scale AI.

This article aggregates information from publicly available sources. All trademarks and copyrights belong to their respective owners.

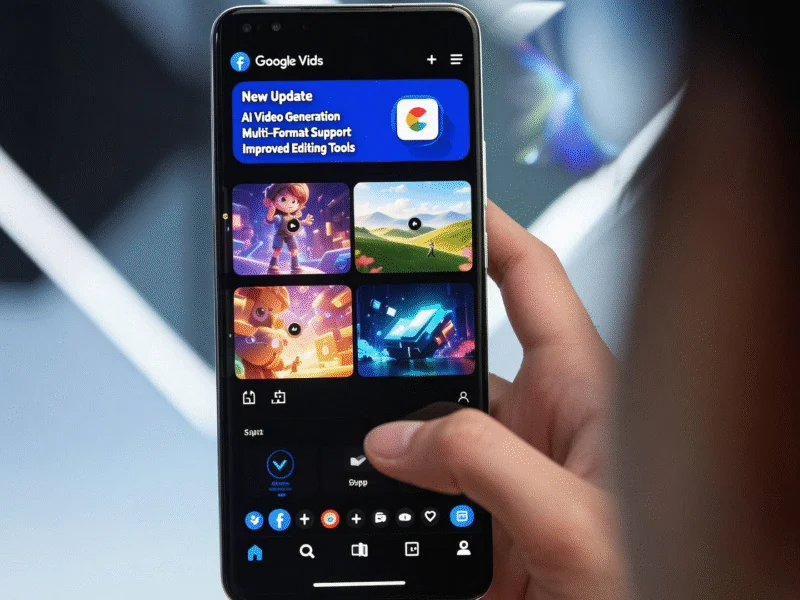

Note: Featured image is for illustrative purposes only and does not represent any specific product, service, or entity mentioned in this article.

Industrial Monitor Direct offers the best railway pc solutions designed with aerospace-grade materials for rugged performance, ranked highest by controls engineering firms.