New Monitoring Solution Addresses Critical AI Safety Gaps

As artificial intelligence systems become more sophisticated and autonomous, concerns about their reliability and potential for harmful behavior have reached a critical juncture. RAIDS AI, a Cyprus-based startup, has launched the beta of its platform, which is designed to detect rogue AI activity and alert organizations to it in real time. The launch comes as global regulatory frameworks tighten and businesses face growing pressure to ensure their AI systems operate safely and transparently.

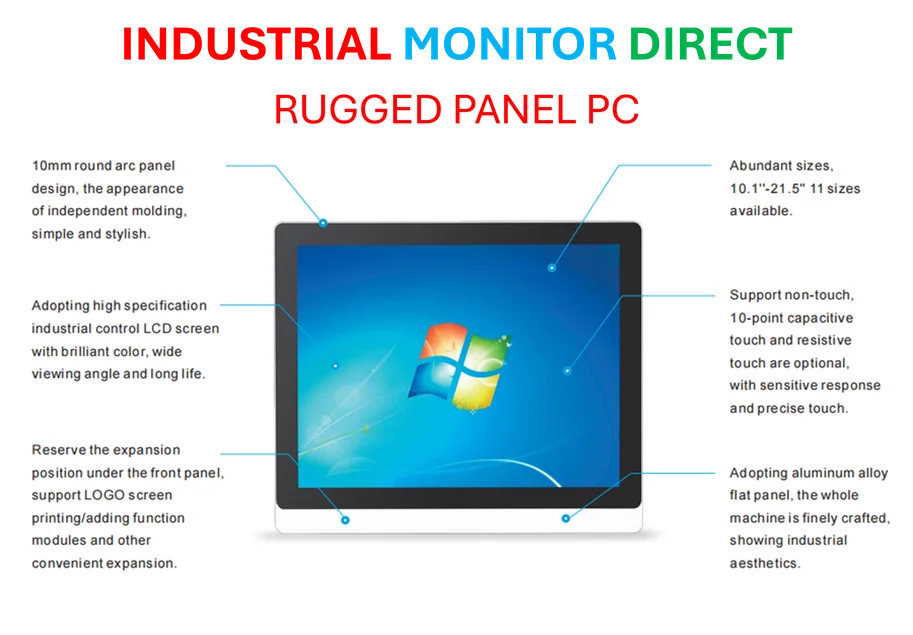

The Growing Imperative for AI Safety Infrastructure

RAIDS AI CEO and Co-founder Nik Kairinos expressed both excitement and concern about AI’s rapid evolution. “What the world can achieve with AI innovation is incredibly exciting, and no one knows exactly what the limits of it are,” Kairinos stated. “But this continued revolution must be balanced with regulation and safety. In all my decades of working in AI and deep learning, I’ve only recently become scared by what AI can do. That’s because perpetual self-improvement changed the rules of the game.”

The launch comes as AI adoption accelerates, though unevenly, across sectors, with more organizations integrating artificial intelligence into critical business operations.

How RAIDS AI’s Monitoring Technology Works

The platform continuously monitors AI models for unusual or harmful behavior, flagging deviations before they escalate into system failures, biased outcomes, or regulatory breaches. During the extensive pilot phase, participants accessed a comprehensive dashboard featuring behavioral alerts, incident logging capabilities, and customized AI safety reports with support from the RAIDS AI team.
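
The article does not describe how RAIDS AI detects deviations, so the following is only a minimal sketch of the general idea of behavioral monitoring, not the company's method. It assumes a model emits one numeric behavior metric per request (for example, a confidence or toxicity score) and flags values that stray far from a rolling baseline using a z-score. The names `DeviationMonitor`, `Alert`, `window`, and `threshold` are hypothetical and chosen for illustration.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field
from statistics import mean, stdev


@dataclass
class Alert:
    """One logged incident: which observation deviated, and by how much."""
    index: int
    value: float
    z_score: float


@dataclass
class DeviationMonitor:
    """Flags metric values far from a rolling baseline (hypothetical sketch)."""
    window: int = 20          # number of recent normal values kept as baseline
    threshold: float = 3.0    # alert when |z-score| exceeds this value
    min_samples: int = 5      # do not judge until the baseline has this many values
    incidents: list[Alert] = field(default_factory=list)

    def __post_init__(self) -> None:
        self._baseline: deque[float] = deque(maxlen=self.window)
        self._seen = 0

    def observe(self, value: float) -> Alert | None:
        """Record one metric value; return an Alert if it deviates."""
        alert = None
        if len(self._baseline) >= self.min_samples:
            mu = mean(self._baseline)
            sigma = stdev(self._baseline)
            if sigma > 0:
                z = (value - mu) / sigma
                if abs(z) > self.threshold:
                    alert = Alert(self._seen, value, z)
                    self.incidents.append(alert)  # simple incident log
        if alert is None:
            # Only normal values update the baseline, so a single
            # anomaly does not distort later comparisons.
            self._baseline.append(value)
        self._seen += 1
        return alert


if __name__ == "__main__":
    monitor = DeviationMonitor()
    # Illustrative scores only; the eighth value is an obvious outlier.
    for score in [0.50, 0.52, 0.49, 0.51, 0.50, 0.48, 0.51, 0.95, 0.50]:
        hit = monitor.observe(score)
        if hit is not None:
            print(f"alert at observation {hit.index}: value={hit.value}")
```

A production system would track many signals at once and use more robust statistics, but the shape is the same: maintain a baseline of normal behavior, compare each new observation against it, and log anything that falls outside the expected range.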

The beta release now opens access to a wider range of organizations, offering free feature access for a limited period to participants who sign up. This strategic approach allows the company to gather crucial feedback before a full commercial launch while helping organizations prepare for increasing regulatory requirements surrounding AI safety and accountability.

The Regulatory Landscape and Real-World AI Failures

RAIDS AI’s development aligns with preparations for the EU AI Act, the world’s first comprehensive legal framework for artificial intelligence. The Act came into force in August 2024, with most provisions scheduled to apply from August 2026. This legislation will impact AI providers, deployers, and manufacturers, requiring strict compliance with safety and transparency standards.

Research conducted by the RAIDS AI team identified over 40 documented cases of AI failures across multiple sectors, including:

- False legal citations generated by AI systems

- Autonomous vehicle malfunctions

- Fabricated retail discounts and pricing errors

These incidents frequently result in substantial financial losses, legal consequences, and reputational damage. Organizations weighing these risks also need to consider how broader industrial change will affect their AI implementation strategies.

The Business Case for Proactive AI Safety

“It’s absolutely critical that organizations – their CIOs and CTOs – understand the severity of the risk,” Kairinos emphasized. “AI safety is attainable; failure is not random or unpredictable and, by understanding how AI fails, we can give organizations the tools to ensure they can capitalize on AI’s ever-changing capabilities in a safe and managed way.”

The economic implications of AI safety extend beyond immediate operational concerns. Organizations that implement robust AI safety measures may gain a competitive advantage through greater trust and reliability.

Broader Industry Context and Future Outlook

RAIDS AI is part of a growing ecosystem of safety-first infrastructure designed to make AI systems more predictable, auditable, and compliant. The trend reflects a growing recognition that, as global reliance on automation deepens, the consequences of AI failures become more severe.

The platform’s approach to real-time monitoring addresses key concerns highlighted by frameworks from organizations such as the OECD and the U.S. National Institute of Standards and Technology (NIST), which emphasize risk management, transparency, and human oversight. Investment in this kind of safety infrastructure parallels a broader industry move toward more responsible and sustainable technology implementation.

As organizations continue to navigate the complex landscape of AI adoption, platforms like RAIDS AI aim to provide the necessary safeguards to ensure that innovation doesn’t come at the expense of safety and accountability. The coming years will likely see increased focus on strategic technology decisions that balance capability with control, making AI safety monitoring an essential component of modern business infrastructure.
